Like most of you, I used to think of cross-browser testing as a necessary evil. Necessary, because I knew that I could not depend on each browser, device, operating system, and viewport size to render identically. And evil, because, in my experience, the effort to set up and run a bunch of identical tests on different devices and viewport sizes was only slightly less aggravating than scheduling dental surgery.

So, can you imagine someone actually looking forward to running all those tests? And, what’s more, actually being able to quickly spot and handle the differences across those platforms easily and effectively? I was skeptical that I could have that experience – and found that I could with Applitools Ultrafast Grid.

In this article, I will explain how I checked the behavior of one application across 29 different browser, operating system, device, and viewport configurations in a mere 19 seconds – and uncovered 119 visual differences across all those environments.

One Blog – Many Ways To Access It

I wrote my first blog post in 2010. Since then, I have published over 225 posts on my personal blog – Essence Of Testing, not counting what I have published on other blogs and in books. Given all this, one would think that publishing is easy… but the reality is far from it. Each new post has to tell a story … which means different content with relevant images, tables, code snippets, etc. Yet styling needs to be consistent.

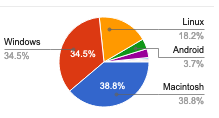

The analytics reports from my blog are pretty interesting.

The readers are coming from a variety of operating systems …

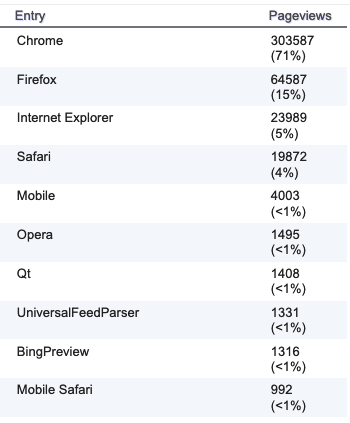

And using different browsers…

That said, the popular browsers seem to be Chrome, Firefox & Internet Explorer.

The data above is important because the look and feel of each post should be the same (and good) regardless of the OS / browser combination, with no weird layout issues that make a post difficult to read in a desktop browser or on the mobile web.

The aspect of validating the same functionality and the UI look-and-feel across different browsers is called Cross-Browser Testing.

To check this, I typically use Chrome Developer Tools to emulate different devices and see how the page loads in different device sizes and layouts (portrait/landscape). I like to change the look and feel of my blog from time to time, which means that whenever I change something common across the blog, I want to check the impact on older published posts. This is all done manually, which takes a lot of time, and usually I am unable to do all the due diligence before publishing the change. I will admit that, many a time, I do not make any changes at all just to avoid this pain.

In the case of my blog, the impact of a bad publish is not very severe. However, if this were a B2B / B2C website where a bad release affects how users use the functionality, there could be a big loss of brand credibility and revenue, and users could move to alternative and better solutions.

This is where one needs to look at an automation solution that can help resolve these manual and error-prone activities. This blog post shows how I overcame this challenge.

The first part of the solution was to have some automated tests to ensure the blog loads consistently. I quickly wrote a few simple Java / TestNG / Selenium-WebDriver based tests for this aspect.

See the sample test here

Challenges of Functional Automation

Functional automation is inherently slow. It needs a deployed product with all pieces of the architecture available (or appropriately mocked) so that the automated test scenarios can mimic end-user behavior with the product. Functional test automation also requires extra effort to ensure the tests are stable and give consistent results.

In order to achieve this, you need to choose your toolset carefully and build the automation solution with various factors in mind. This post on “Test Automation in the World of AI & ML” details the criteria to keep in mind for setting up a good Test Automation Framework.

The need for a vision!

The aspect that functional validation is unable to cover, and hence is mostly done manually, is ensuring the UI / look-and-feel continues to be consistent and as expected with each incremental functional change in the product. This is one of the largest blockers to releasing software faster and with confidence. This is the challenge Applitools has solved in a very elegant, easy and accurate way.

To automate the validation of the look-and-feel, and integrate easily with your functional automation suite, I have used Applitools Eyes. Applitools has over 40 SDKs that can help you embed AI-based visual testing in your functional automation (along with other use cases). See this course on Automated Visual Testing: A Fast Path To Test Automation Success to learn how to use Applitools for Visual Validation by integrating with your existing Functional Automation.

See the sample test here.

Now I am able to run my tests on a variety of browsers and also do visual validation for that.

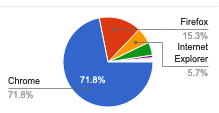

On running the above test, I see the following type of results in the Applitools dashboard:

There are 6 tests, with 10 visual rendering differences found in 5m 33 seconds.

Cross-Browser Testing

We saw above the need for me to run my tests (either manually, or in some automated form) across a set of browsers.

This Cross-Browser Testing strategy aspect is critical in the Product Test Strategy. Why is this essential you ask? Well, your users can be on any of the browsers (versions) / operating systems, and for that matter, in this age of cheap mobile data plans, on mobile-based browsers as well. Hence you need to ensure your functionality works fine in all of these combinations.

The Cross-Browser Testing strategy prioritizes the Browser / OS / viewport-size sets (each referred to as a combination) on which the automated tests should be executed, so that they cover the most important combinations used by the majority of your users. This strategy has a big impact on the infrastructure requirements, the test execution and feedback time, and the time required for analysis and investigation of the test results.

This means that if your tests take 1 hour to run on one combination, and if you have 10 combinations to test, the time taken for test execution & getting feedback increases to 10 hours.
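To make the arithmetic concrete, here is a minimal sketch in plain Java. The class and method names are hypothetical, and the parallel estimate assumes each worker runs one full combination at a time, which is a simplification rather than a description of any particular grid.

```java
class CrossBrowserCost {

    /** Total time when every combination runs one after another. */
    static double sequentialHours(double hoursPerCombination, int combinations) {
        return hoursPerCombination * combinations;
    }

    /**
     * Rough wall-clock estimate when combinations are spread over workers.
     * Assumption: a worker handles one whole combination at a time.
     */
    static double parallelHours(double hoursPerCombination, int combinations, int workers) {
        int batches = (combinations + workers - 1) / workers; // ceiling division
        return hoursPerCombination * batches;
    }

    public static void main(String[] args) {
        // 1 hour per combination, 10 combinations, as in the example above.
        System.out.println("Sequential: " + sequentialHours(1.0, 10) + " hours");
        // Hypothetical grid with 4 workers: 3 batches of up to 4 combinations.
        System.out.println("4 workers:  " + parallelHours(1.0, 10, 4) + " hours");
    }
}
```

Even in the best case of one worker per combination, the wall-clock time only drops back to the single-combination time, and every worker still has to be provisioned and maintained.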

You may be able to speed up the test execution time by running the tests in parallel, but you still need to:

- Manage and provision the relevant infrastructure

- Put in added effort to ensure your functional tests run correctly in each combination and viewport size

- Spend additional, non-trivial time analyzing the test results for all these combinations

I achieved this by running my tests on a local Selenium Grid.

See the sample test here.

These tests took over 5m when running on Selenium Grid on Chrome & Firefox:

Note that I could have used any of the cloud solutions as well for this part and results would have been similar.

BUT – do you really need to do this?

Unless there is some custom implementation for different browsers (e.g. drop-down controls), all the above effort may not really be worth it: once the functionality has been validated in one combination, it WILL also work correctly in the remaining combinations.

In my case, there is no functionality. Look and feel is the critical aspect that is making me run my tests in various different browsers.

If you really think about the need for cross-browser testing, how often do you see issues in functionality because of the different browsers?

In my experience, what actually differs in these cases is not the functionality, but the user experience / visual rendering / look-and-feel of your site in these different combinations.

Applitools Ultrafast Grid

There is clearly a use-case requiring cross-browser testing – to validate the aspect of look-and-feel/user experience in all the supported combinations. This is where I am very excited to share with you Applitools Ultrafast Grid.

Wouldn’t it be amazing IF we can execute our functional tests just once to ensure our features are working correctly, AND, magically do the look-and-feel validations for ALL the supported combinations automatically? This is exactly what the Applitools Ultrafast Grid does.

From Applitools website –

With Applitools Eyes in your functional automation, in a test, every eyes.checkWindow() call will do a visual validation of the screen/page/element in question against the baseline.

With the Applitools Ultrafast Grid, in a test, every eyes.checkWindow() command will do a visual validation of the screen/page/element in question FOR ALL THE COMBINATIONS YOU MENTION. So the only time that is added, is to do additional visual validation in the combinations. This happens very fast as it is done in parallel in the Applitools Grid in the cloud.

Another key aspect of this Applitools Ultrafast Grid is that it does not matter where your functional test has run – it could be the developer machine, QA machine or your CI machine (in your data center/server room, or running in some cloud machine). The AI-based Visual Validation for your specified combinations is happening in the Applitools cloud and results are sent back to wherever the test ran from.

The time saved in this approach is significant.

- Functional tests are executed only in 1 combination. So if your tests took 1 hour to run for 1 combination, and there are 10 combinations you have, then you have saved 9 hours of test execution time.

- Now you have visual validation results from all the supported configurations.

- You can use the different capabilities (my favorite being Group steps by similar differences and Root Cause Analysis) from the Applitools dashboard to quickly understand the nature of differences found, and take action (like – report a bug, update baselines, etc.)

This means you do not need to review the full results of functional tests that were executed 10 times; instead, you review only one set of functional results plus the visual differences (if any) on specific environments. This reduces the effort of implementing tests that run across all OS + browser combinations, and reduces flakiness due to connectivity/timing related issues.

Visual Grid in Action

How can you start? Well, it’s very easy. Once you have integrated Applitools Eyes in your tests, you need to specify the Ultrafast Grid configuration and pass that to the tests.

See the sample test here.

Steps:

- Get Applitools account / API Key

- Write your functional test – in this example, we will write a Java / TestNG / Selenium-WebDriver based test

- Specify your Visual Grid configuration

- Do visual validations at relevant points in your test execution

See this (https://github.com/anandbagmar/ultrafastgrid) GitHub repository for a working example of how to do Functional Testing and integrate Visual Testing for all expected combinations of browsers/os/viewport sizes using Applitools Visual Grid.

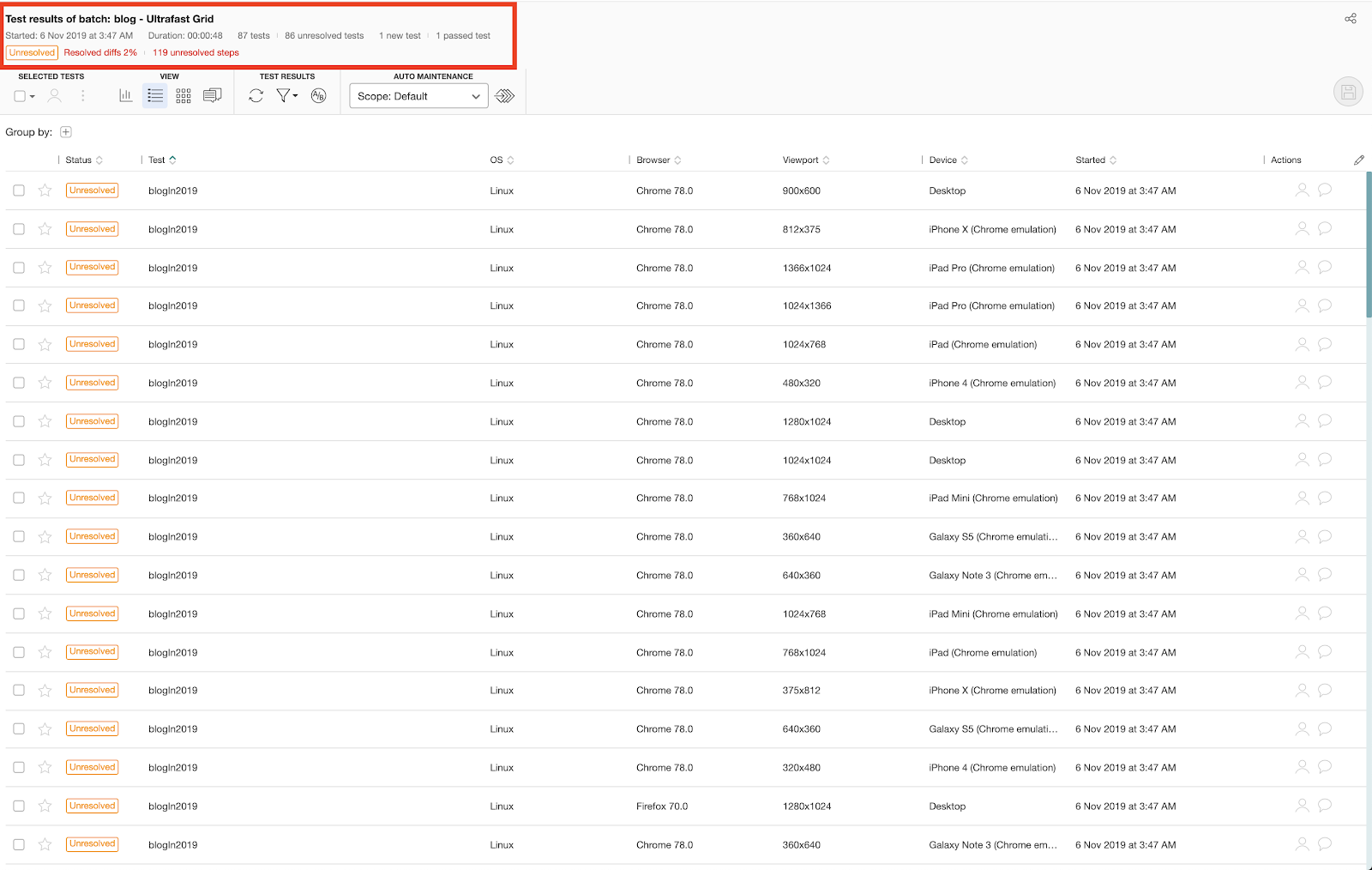

Here are the results from the Applitools dashboard for tests run locally, but with 29 browser & viewport combinations specified using the Applitools Ultrafast Grid:

There are 87 tests, with 119 visual rendering differences found in less than a minute!
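The test count in the dashboard is consistent with one result per test per combination: 87 / 29 = 3, just as the earlier 6 results came from 2 browsers. A small sketch in plain Java shows how such a matrix multiplies out. The browser names, viewport sizes, device names, and the class itself are hypothetical placeholders. They are not the actual 29 combinations used in the repository, and this is not the Applitools SDK API; see the GitHub repository for the real configuration.

```java
import java.util.ArrayList;
import java.util.List;

class CombinationMatrix {

    /** Pairs every browser with every viewport size, e.g. "Chrome @ 1024x768". */
    static List<String> desktopCombinations(List<String> browsers, List<String> viewports) {
        List<String> combinations = new ArrayList<>();
        for (String browser : browsers) {
            for (String viewport : viewports) {
                combinations.add(browser + " @ " + viewport);
            }
        }
        return combinations;
    }

    /** One dashboard result per test per combination. */
    static int expectedResults(int tests, int combinations) {
        return tests * combinations;
    }

    public static void main(String[] args) {
        // Hypothetical matrix: 4 browsers x 6 viewports + 5 emulated devices = 29.
        List<String> browsers = List.of("Chrome", "Firefox", "Edge", "Safari");
        List<String> viewports = List.of("800x600", "1024x768", "1280x800",
                                         "1366x768", "1600x900", "1920x1080");
        List<String> devices = List.of("phone-a portrait", "phone-a landscape",
                                       "phone-b portrait", "tablet-a portrait",
                                       "tablet-a landscape");

        List<String> all = new ArrayList<>(desktopCombinations(browsers, viewports));
        all.addAll(devices);

        int tests = 3; // inferred from 87 / 29, not stated in the article
        System.out.println(all.size() + " combinations, "
                + expectedResults(tests, all.size()) + " results");
    }
}
```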

Why is this a good idea?

Data helps to show the value of this approach.

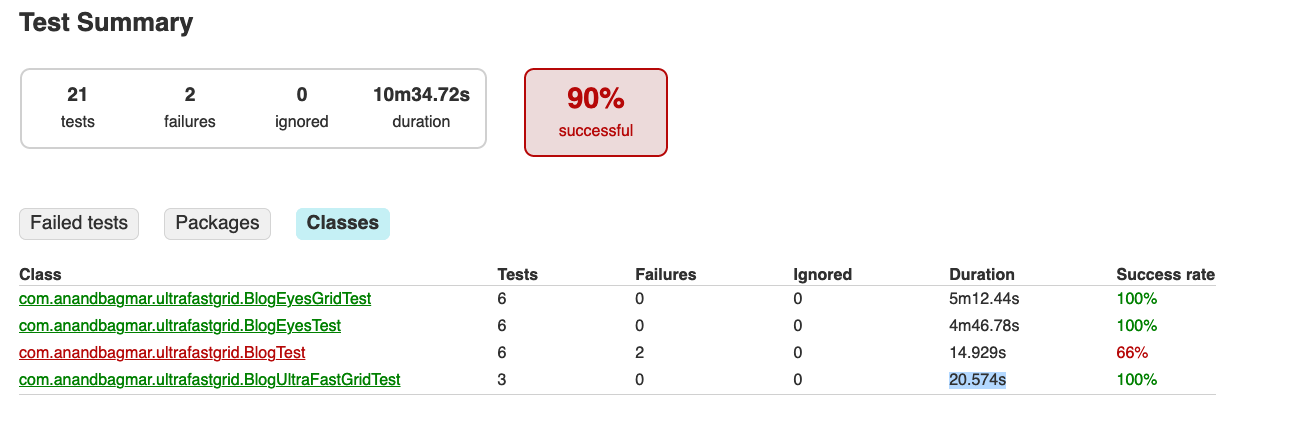

As evident from the tests in the GitHub repo (https://github.com/anandbagmar/ultrafastgrid), I ran the following types of tests:

- Run tests with Applitools integrated locally on Chrome & Firefox

- Run tests with Applitools integrated on a Selenium Grid for Chrome & Firefox setup on a local machine

- Run tests on a local machine with Applitools Ultrafast Grid configured

These tests were run thrice to ensure test result consistency.

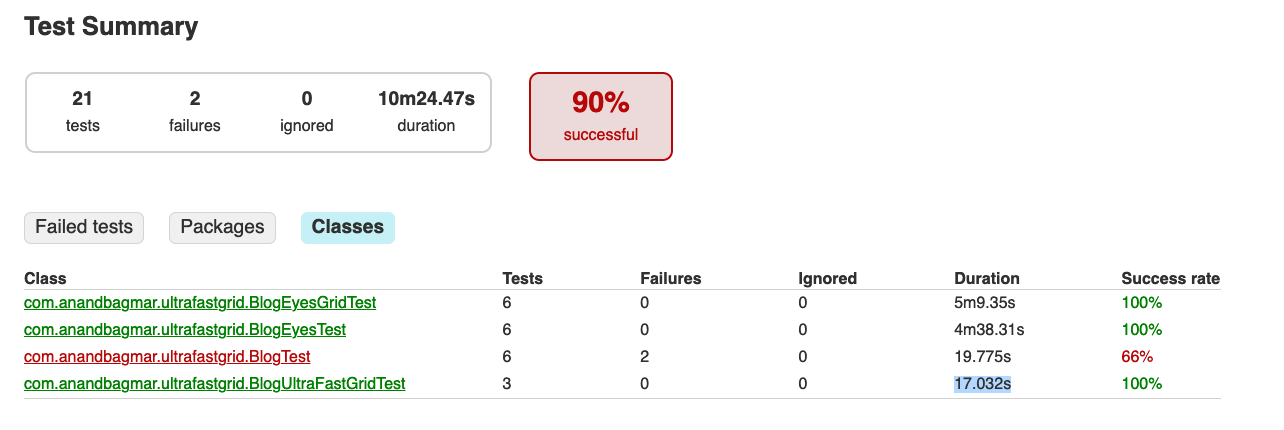

Run 1:

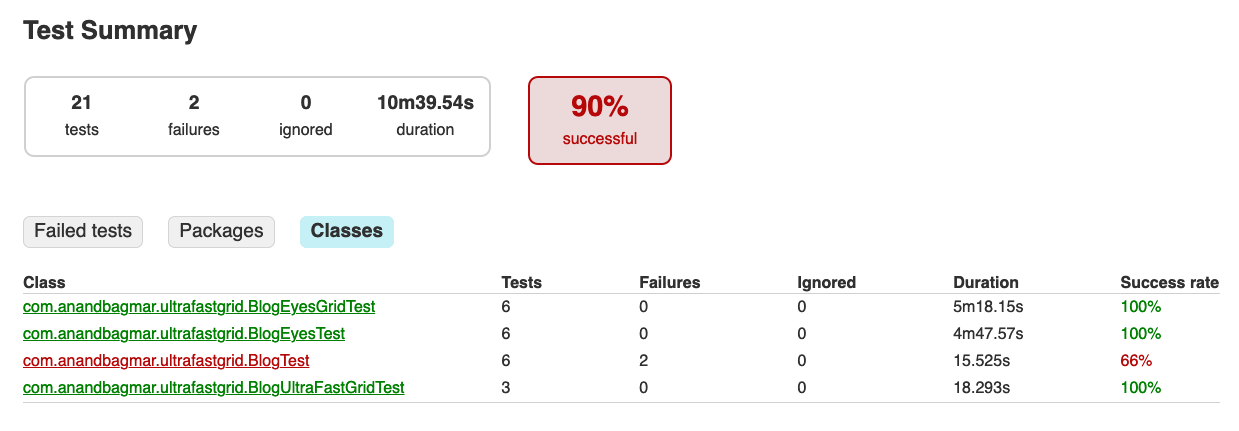

Run 2:

Run 3:

The table below summarises the time taken to run these tests in different combinations.

| Metric | Local execution, with Applitools Eyes on Chrome & Firefox | Local execution, run in parallel using Selenium Grid on Chrome & Firefox | Local execution with Applitools Ultrafast Grid (29 combinations of browser & viewport sizes) |
|---|---|---|---|
| Number of visual differences found | 10 | 10 | 119 |
| Time taken, run 1 | 4m38.31s | 5m9.35s | 17.032s |
| Time taken, run 2 | 4m47.57s | 5m18.15s | 18.293s |
| Time taken, run 3 | 4m46.78s | 5m12.44s | 20.574s |

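The difference when using the Applitools Ultrafast Grid is staggering: each run finished in about 17 to 21 seconds across 29 combinations, compared with between 4m38s and 5m18s for just Chrome & Firefox in the other two setups.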
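For readers who want to check the numbers, the following sketch averages the three runs per setup from the table and compares them. It is plain Java with hypothetical class and method names; only the timings come from the table.

```java
import java.util.List;

class RunTimeSummary {

    /** Parses durations written like "4m38.31s" or "17.032s" into seconds. */
    static double toSeconds(String text) {
        String rest = text.trim();
        double minutes = 0;
        int minuteMark = rest.indexOf('m');
        if (minuteMark >= 0) {
            minutes = Double.parseDouble(rest.substring(0, minuteMark));
            rest = rest.substring(minuteMark + 1);
        }
        // Drop the trailing 's' before parsing the seconds part.
        double seconds = Double.parseDouble(rest.substring(0, rest.length() - 1));
        return minutes * 60 + seconds;
    }

    /** Mean run time in seconds for one setup. */
    static double averageSeconds(List<String> runs) {
        return runs.stream().mapToDouble(RunTimeSummary::toSeconds).average().orElse(0);
    }

    public static void main(String[] args) {
        double local = averageSeconds(List.of("4m38.31s", "4m47.57s", "4m46.78s"));
        double grid = averageSeconds(List.of("5m9.35s", "5m18.15s", "5m12.44s"));
        double ultrafast = averageSeconds(List.of("17.032s", "18.293s", "20.574s"));

        System.out.printf("Local average:          %.1f s%n", local);
        System.out.printf("Selenium Grid average:  %.1f s%n", grid);
        System.out.printf("Ultrafast Grid average: %.1f s%n", ultrafast);
        System.out.printf("Local / Ultrafast:      %.1fx%n", local / ultrafast);
        System.out.printf("Grid / Ultrafast:       %.1fx%n", grid / ultrafast);
    }
}
```

On these figures the Ultrafast Grid runs average under 19 seconds, on the order of 15 to 17 times faster than the other two setups, while covering 29 combinations instead of 2.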

Summary

Think about your cross-browser testing strategy, and if there is no need to test the functionality on all supported browser combinations, the Applitools Ultrafast Grid is a great way to get early, fast and accurate feedback on the state of your visual rendering across all supported browser combinations.

About the Author

Anand Bagmar is a hands-on and result-oriented Software Quality Evangelist with 20+ years in the Software field. He is passionate about shipping a quality product and specializes in building automated testing tools, infrastructure, and frameworks. Anand writes testing related blogs and has built open-source tools related to Software Testing – WAAT (Web Analytics Automation Testing Framework), TaaS (for automating the integration testing in disparate systems) and TTA (Test Trend Analyzer). Follow him on Twitter, connect with him on LinkedIn, or visit his Github site: https://anandbagmar.github.io/

For More Information

- Sign up for Test Automation University

- Read all about Functional Test Myopia

- Learn how to Modernize Your Functional Testing

- Sign up for a free Applitools account

- Request an Applitools Demo

- Visit the Applitools Tutorials